ASUS is exhibiting its server lineup this week at CloudFest 2024 in Rust, Germany. At booth #C03, the vendor is showcasing its most recent server products, with the ASUS ESC NM1-E1 GPU server as the main attraction. Built on NVIDIA’s MGX modular reference architecture, the server is positioned by ASUS as a high-performance platform for AI supercomputing.

ASUS offers an extensive range of server options, from affordable entry-level models to high-performance GPU servers and liquid-cooled rack systems, to cover a wide range of computing demands. For large-language-model (LLM) training and inference, ASUS says its team draws on its experience with MLPerf benchmarks to tune both hardware and software. According to the company, this makes it easier to integrate complete AI solutions for demanding AI supercomputing workloads.

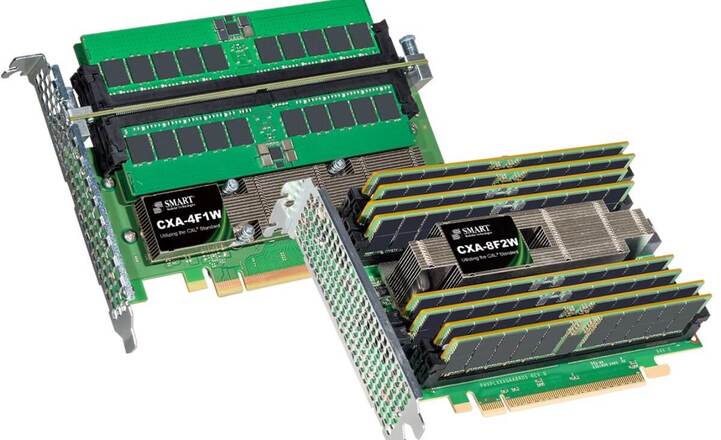

ASUS NVIDIA MGX-powered Server

The ESC NM1-E1, the newest NVIDIA MGX-powered 2U server from ASUS, is built around the NVIDIA GH200 Grace Hopper Superchip, which ASUS says delivers high performance and efficiency. The superchip’s NVIDIA Grace CPU has 72 Arm Neoverse V2 cores with Scalable Vector Extension 2 (SVE2) and is linked to the Hopper GPU through NVIDIA NVLink-C2C technology.

ASUS MGX-powered servers integrate NVIDIA BlueField-3 DPUs and ConnectX-7 network adapters, providing 400 Gb/s of data throughput for enterprise AI development and deployment. Paired with NVIDIA AI Enterprise, an end-to-end, cloud-native software platform for creating and deploying enterprise-grade AI applications, the ESC NM1-E1 is said to offer high flexibility and scalability for AI-driven data centers, HPC, data analytics, and NVIDIA Omniverse applications.

ASUS GH 200

Compact GH200 1U Server for AI and HPC

The ASUS ESR1-511N-A1 server, housed in an 800 mm 1U chassis, is equipped with an NVIDIA GH200 Grace Hopper Superchip and targets HPC as well as deep learning (DL) training and inference.

Thanks to NVIDIA NVLink-C2C technology, the superchip offers a coherent memory pool shared by CPU and GPU, with high bandwidth and low latency. According to ASUS, the compact form factor allows high rack density and good scalability, and makes the server easy to integrate into existing IT infrastructure.

NVIDIA HGX H100 eight-GPU server

The ASUS ESC N8A-E12 is a 7U dual-socket server with eight NVIDIA H100 Tensor Core GPUs and two AMD EPYC 9004 CPUs. It is designed for AI and data science workloads and uses a one-GPU-to-one-NIC configuration to maximize throughput in compute-intensive tasks.

ASUS highlights the server’s cooling design and components, citing thermal efficiency, scalability, and performance. According to the company, the design significantly reduces operating expenses.

Enterprise AI Applications

The ASUS ESC8000A-E12P server is designed for the demanding requirements of enterprise AI applications. It combines high-end GPUs with fast GPU interconnects and a high-bandwidth fabric.

The server accommodates up to eight dual-slot GPUs and can be configured to scale across a wide range of workloads. It also offers an optional NVIDIA NVLink Bridge or AMD Infinity Fabric Link, which can improve performance scaling. According to ASUS, the server is suited to both AI and HPC environments.

Removing RAID Bottlenecks for Peak SSD Performance

The ASUS RS720A-E12-RS24 server combines AMD EPYC 9004 CPUs with SupremeRAID by Graid Technology, which ASUS says delivers high throughput, low latency, and good scalability. Working in tandem with the BeeGFS parallel file system, the RS720A-E12-RS24 is intended to eliminate the traditional RAID bottleneck and maximize SSD performance without consuming CPU cycles. ASUS and Graid Technology present the collaboration as a way to deliver efficient and reliable storage solutions.

In addition, the server supports up to 128 Zen 4c cores, a TDP of up to 400 W per socket, and DDR5 memory at up to 4800 MHz. With nine PCIe 5.0 slots and 24 drive bays, it leaves plenty of room for expansion. Support for multiple GPUs, enhanced air cooling, and remote management make it a candidate for demanding AI and HPC applications.

Direct-to-Chip Cooling for Multi-Node Solutions

With its four-node architecture and optimizations for cloud, HCI, and CDN applications, the ASUS RS720QA-E12-RS8U server is aimed at improving IT performance and flexibility. Direct liquid cooling handles high-TDP CPUs and increases data center efficiency.

Every node includes support for two CPUs, large memory capacity, and several networking options. Intended for HPC data centers, the server makes efficient use of rack space and offers direct-to-chip (D2C) cooling options. These features help reduce power usage effectiveness (PUE) and operating expenses while supporting sustainable energy objectives.

Asus booth
